Artificial intelligence and machine learning (AI/ML) have already made their way into the healthcare sector, and their presence is increasingly evident. This is a welcome development, because the US healthcare system still lags behind technologically. For instance, it’s surprising that even in 2024, doctors’ offices still send faxes!

Stakeholders across the healthcare industry agree that it’s time for an upgrade to the digital age. Many repetitive tasks can be automated, and AI/ML can help achieve better patient outcomes and improve population health.

However, unlike industries such as entertainment, retail, and advertising that have already benefited from AI, healthcare poses unique risks when it adopts AI/ML. Any technological failure or malfunction in healthcare can have severe consequences, putting life and limb at risk.

To mitigate these risks, appropriate regulations are necessary to protect patients from the potential dangers of unsafe and overhyped technologies.

This is especially important because, historically, the digital health industry has rarely self-regulated in our profit-driven society.

Ensuring Safety: The US Food and Drug Administration’s (FDA) Oversight of AI/ML-Enabled Medical Devices

Regulating AI/ML digital health tools falls under the FDA’s jurisdiction. The FDA reviews healthcare AI/ML-enabled tools as software as a medical device (SaMD)[1].

Whether a device requires pre-market review, and which kind, depends on its intended use and risk level. According to the FDA, a device is a product intended to diagnose, cure, mitigate, treat, or prevent disease.

Device risk levels are classified as Class I (low risk), Class II (moderate risk), and Class III (high risk)[2]. Examples of these risk levels include bandages (Class I), pregnancy test kits (Class II), and cardiac pacemakers (Class III).

The FDA’s risk classifications are legally binding regulations that apply to any device meeting their requirements.

There are three major pathways to FDA marketing authorization, chosen largely by risk level. The most rigorous is pre-market approval (PMA), which is reserved for high-risk (Class III) devices.

The pre-market notification, or 510(k), pathway is for moderate-risk devices that are substantially equivalent to a device already on the market (a “predicate”)[3]. The De Novo pathway is for novel low- to moderate-risk devices that have no such predicate.
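The pathway logic can be sketched as a small decision function. This is a deliberately simplified illustration, not the FDA’s actual procedure: real classification depends on a device’s intended use and its specific classification regulation, many Class I (and some Class II) device types are exempt from pre-market submission, and De Novo is intended for novel low- to moderate-risk devices that lack a predicate. The names `Pathway` and `suggest_pathway` are hypothetical.

```python
from enum import Enum


class Pathway(Enum):
    """Simplified FDA pre-market pathways (illustrative only)."""
    EXEMPT = "exempt from pre-market submission"
    PMN_510K = "510(k) pre-market notification"
    DE_NOVO = "De Novo classification request"
    PMA = "pre-market approval (PMA)"


def suggest_pathway(risk_class: int, has_predicate: bool, exempt: bool = False) -> Pathway:
    """Map a risk class and predicate status to a *typical* pathway.

    Hypothetical helper: ignores special controls, classification
    regulations, and every other nuance of real FDA review.
    """
    if risk_class not in (1, 2, 3):
        raise ValueError("risk_class must be 1, 2, or 3")
    if risk_class == 3:
        # High-risk devices generally require the most rigorous review.
        return Pathway.PMA
    if exempt:
        # Many Class I device types need no pre-market submission.
        return Pathway.EXEMPT
    if has_predicate:
        # A substantially equivalent device is already on the market.
        return Pathway.PMN_510K
    # Novel low- to moderate-risk device with no predicate.
    return Pathway.DE_NOVO


if __name__ == "__main__":
    print(suggest_pathway(2, has_predicate=True).value)   # 510(k) pre-market notification
    print(suggest_pathway(2, has_predicate=False).value)  # De Novo classification request
```

Checking risk class before the exemption flag keeps a high-risk device from ever being routed around PMA by a mislabeled input.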

For AI-enabled medical devices specifically, the FDA does not currently have legally binding regulations governing their authorization. Since 2019, the FDA has issued multiple guidance documents[4] and recommendations, including principles of good machine learning practice.

The aim is to gather feedback from all stakeholders ahead of comprehensive regulation for health AI devices.

It is worth noting that AI device authorization is unique in one respect: developers can submit a document detailing planned future modifications to the model that powers an AI device. This document is called a predetermined change control plan (PCCP)[5].

The PCCP is a valuable concept: it allows an AI model to be tweaked and updated over its lifecycle without a fresh FDA review, as long as the modifications stay within the scope of the submitted PCCP.
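To make the “within scope” idea concrete, here is a minimal sketch of checking a proposed model update against a plan’s pre-specified bounds. Everything in it is hypothetical: the `ChangeControlPlan` and `ProposedUpdate` fields (a fixed intended use, a set of allowed change types, and performance floors on a locked test set) are illustrative assumptions, not the contents the FDA requires in a real PCCP.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeControlPlan:
    """Hypothetical, simplified stand-in for a submitted PCCP."""
    intended_use: str
    allowed_changes: frozenset[str]  # pre-specified modification types
    min_sensitivity: float           # performance floor on a locked test set
    min_specificity: float


@dataclass(frozen=True)
class ProposedUpdate:
    """A proposed modification to the deployed model (hypothetical)."""
    intended_use: str
    change_type: str
    sensitivity: float
    specificity: float


def within_pccp(plan: ChangeControlPlan, update: ProposedUpdate) -> tuple[bool, list[str]]:
    """Return (in_scope, reasons); any reason means a new review is needed."""
    reasons = []
    if update.intended_use != plan.intended_use:
        reasons.append("intended use changed")
    if update.change_type not in plan.allowed_changes:
        reasons.append(f"change type '{update.change_type}' not pre-specified")
    if update.sensitivity < plan.min_sensitivity:
        reasons.append("sensitivity below plan floor")
    if update.specificity < plan.min_specificity:
        reasons.append("specificity below plan floor")
    return (not reasons, reasons)


if __name__ == "__main__":
    plan = ChangeControlPlan(
        intended_use="hypothetical inpatient deterioration alert",
        allowed_changes=frozenset({"retrain_same_architecture"}),
        min_sensitivity=0.80,
        min_specificity=0.70,
    )
    retrain = ProposedUpdate(plan.intended_use, "retrain_same_architecture", 0.84, 0.73)
    redesign = ProposedUpdate(plan.intended_use, "new_architecture", 0.90, 0.75)
    print(within_pccp(plan, retrain))   # (True, [])
    print(within_pccp(plan, redesign))  # (False, ["change type 'new_architecture' not pre-specified"])
```

Returning the reasons, not just a boolean, mirrors the practical question a developer faces: which part of the plan a change would violate.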

FDA’s Laudable Achievements So Far

So far, the FDA has reviewed over 800 AI/ML-enabled devices and approved around 200 of them. This is commendable, given that the agency struggles to keep up with the fast-moving nature of AI/ML innovation.

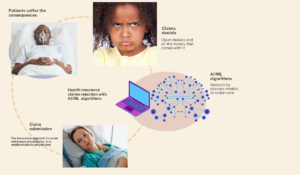

It is also reassuring that the FDA is willing to push beyond its regulatory boundaries, as set by the US Congress, in the interest of patient and population safety. One example was the FDA’s call for regulatory review and pre-market approval of sepsis algorithms embedded in electronic health record (EHR) systems.

There were credible reports that some prominent sepsis algorithms were harmful and produced dangerous outcomes[6]. However, many EHR vendors and Congress were quick to oppose the FDA, warning that it was engaging in regulatory overreach and could not require clinical decision support software, such as sepsis algorithms, to undergo pre-market review[3].

Congress’s objection rested on the 21st Century Cures Act, which exempted some EHR-embedded software similar to the sepsis algorithm from the software-as-a-medical-device designation.

Nevertheless, we agree with the FDA’s decision to regulate algorithms embedded in the EHR, because when the 21st Century Cures Act was enacted in 2016, its exemption for such algorithms did not account for the dynamic and risky nature of complex algorithms.

Algorithmic complexity has increased over time, and algorithms such as large language models (e.g., ChatGPT) were not widely available when the Act’s exemption was written.

The FDA’s PCCP program is an excellent achievement: it aims to keep the agency from hindering fast-moving innovation while still ensuring the safety of AI tools.

As long as the FDA lacks specific, legally enforceable regulations targeting AI-enabled medical devices, AI developers in the EHR space will continue to have a free “ticket” to implement AI tools without concern for safety and effectiveness.

Ahmed-Digital Doc Tweet

FDA Oversight Gap

The most basic oversight gap is that the FDA currently lacks specific regulations for AI-enabled medical devices. It provides only guidance documents and recommendations, which are not legally enforceable[3]. This allows innovators and AI developers to build and deploy tools without sufficient consideration of safety and effectiveness.

Furthermore, the FDA’s device regulations paradigm was not designed for adaptive AI/ML-enabled devices, resulting in a significant gap in oversight for the implementation of these devices[A1].

The congressional exemption of certain software, such as AI tools embedded in EHRs, also hampers FDA oversight and has become outdated in the rapidly evolving AI/ML world.

Another oversight gap is that the FDA needs to make clear that, during the pre-market submission process, developers must demonstrate efforts to prevent AI/ML tools from exacerbating health disparities caused by biased training data.
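One way a developer might demonstrate such efforts is to report model performance separately for each demographic subgroup and flag large gaps. The sketch below computes per-group sensitivity (the true-positive rate) and the largest gap between groups. The function names, the toy records, and the 0.1 tolerance are all hypothetical, and sensitivity is only one of several subgroup metrics a reviewer could reasonably ask for.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity, TP / (TP + FN), for each subgroup.

    records: iterable of (group, y_true, y_pred) with binary labels.
    Groups with no positive cases are omitted (sensitivity is undefined).
    """
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


def max_gap(metric_by_group):
    """Largest difference in the metric between any two subgroups."""
    values = list(metric_by_group.values())
    if not values:
        return 0.0
    return max(values) - min(values)


if __name__ == "__main__":
    # Toy (group, true label, predicted label) records; hypothetical data.
    records = [
        ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
        ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
    ]
    sens = sensitivity_by_group(records)
    print({g: round(v, 2) for g, v in sorted(sens.items())})  # {'A': 0.67, 'B': 0.33}
    print(max_gap(sens) > 0.1)  # True: flag for review under a hypothetical 0.1 tolerance
```

In this toy data the model misses positive cases in group B twice as often as in group A, exactly the kind of disparity a pre-market submission could be asked to surface and explain.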

Additionally, the FDA should consider a tool’s environmental impact as a metric during its review. These algorithms consume substantial amounts of energy, and their environmental impact often goes unnoticed amid the hype and excitement[7].
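To illustrate how such a metric could be quantified, a common back-of-the-envelope approach estimates training energy as accelerator count × average power draw per accelerator × run time × the data center’s power usage effectiveness (PUE), then multiplies by the local grid’s carbon intensity to get emissions. The function name and all inputs below are hypothetical placeholders; a real assessment would use measured power draw and location-specific grid data, and would also count inference energy once a tool is deployed.

```python
def training_footprint(
    num_gpus: int,
    avg_gpu_power_kw: float,
    hours: float,
    pue: float,
    grid_kg_co2e_per_kwh: float,
) -> tuple:
    """Rough (energy_kwh, emissions_kg_co2e) estimate for one training run.

    energy    = GPUs x average power per GPU (kW) x hours x PUE
    emissions = energy x grid carbon intensity (kg CO2e per kWh)
    """
    energy_kwh = num_gpus * avg_gpu_power_kw * hours * pue
    emissions_kg = energy_kwh * grid_kg_co2e_per_kwh
    return energy_kwh, emissions_kg


if __name__ == "__main__":
    # Hypothetical inputs: 8 GPUs at 0.4 kW for 100 hours, PUE 1.5, 0.4 kg CO2e/kWh.
    energy, emissions = training_footprint(8, 0.4, 100, 1.5, 0.4)
    print(f"{energy:.0f} kWh, {emissions:.0f} kg CO2e")  # 480 kWh, 192 kg CO2e
```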

Congressional efforts towards AI/ML-enabled device regulation

In Congress, work on AI regulation, including for AI/ML-enabled devices, falls to the congressional AI task force headed by Representative Jay Obernolte (California). The task force is working toward producing AI regulations.

We also recommend that the task force include regulations binding any health AI/ML developer or institution funded by taxpayer money, whether through the NIH or another government grant. These regulations should require efforts to prevent algorithms from exacerbating health disparities and to reduce the adverse environmental impact of models[7].

Deep dive into the ideological differences between US AI regulations and European Union (EU) regulations:

The General Data Protection Regulation (GDPR) and the AI Act are the laws governing AI in the EU, and their provisions overlap and complement each other. The GDPR, which took effect in 2018, enforces individuals’ rights regarding the processing of their personal data and protects them from decisions based solely on automated processing[8].

In 2024, the EU adopted the AI Act, the first comprehensive legal framework specifically regulating the use of AI. It was published in the EU’s Official Journal in July 2024 and entered into force in August 2024.

This framework prioritizes reducing AI risks and ensuring safety, transparency, non-discrimination, and traceability. It also emphasizes the need for human oversight of AI systems, as outlined in the EU’s AI Act[9,10].

According to Representative Obernolte, the head of the AI congressional task force, his task force acknowledges the differences between the EU environment and the U.S. system.

The U.S. health system, with its diverse population, competing stakeholders, layers of regulation, and state autonomy, would make a “one-size-fits-all” law like the GDPR or the AI Act ineffective in the U.S. With this in mind, the eventual U.S. AI regulations are expected to consider not only an AI tool’s safety and risk profile but also its potential outcomes.

Digital health 360 degrees lens

Implementing AI/ML in healthcare offers potential benefits but also comes with significant risks. We need robust regulations that strike a balance between providing guardrails for AI/ML-enabled devices and avoiding stifling innovation.

Currently, the FDA regulates AI/ML-enabled devices under the designation of software as a medical device (SaMD). However, the FDA’s device regulation paradigm has not kept pace with the dynamic nature of AI/ML-enabled devices.

Additionally, the FDA only provides guidance documents and recommendations for AI/ML-enabled devices, which are not legally binding or enforceable. Despite this oversight gap, the FDA has reviewed over 800 medical AI devices and approved approximately 200 of them.

Certain exemptions to FDA review outlined in the 21st Century Cures Act, which were previously useful, now hinder the FDA’s oversight responsibilities in preventing harm from medical AI/ML-enabled devices.

There is also a congressional task force on AI working to enact laws that will govern AI implementations in the U.S. Unlike the European Union’s GDPR and AI Act, which are primarily risk-based, the congressional task force aims to produce a law that is both outcome-based and risk-based.

Such a law would aim to cater to the diversity of U.S. stakeholders while also fostering AI innovation.

The future of AI regulation in the U.S. is currently uncertain and is likely to remain so for a considerable time.

The complex interplay of multiple stakeholders in a highly diverse and unequal healthcare delivery system, combined with the ever-evolving nature of AI/ML development, presents one of the greatest challenges in ensuring public health safety.

We believe that state autonomy will play a significant role in determining how AI regulations unfold in the future.

Different states will enact laws based on their population needs and healthcare philosophy, all in an effort to balance population health safety with the promotion of innovation and profit.