Algorithmic bias occurs when the application of an algorithm heightens both new or previously identified contemporary inequities in health outcomes.

It replicates new or existing health inequity on a massive scale without adequate oversight[1].

This large-scale algorithmic replication of our society’s health disparity can compound existing disadvantages of different groups based on race, gender, religion, sexual orientation, geographical area, and disability status[2].

Algorithmic bias is not a static phenomenon. Likewise, it is not solely rooted in the technical development of an algorithm; instead, its fundamental origin lies primarily in our societal bias and inequity that sips into the algorithms.

These algorithms, for the most part, function appropriately and are not to blame for their output.

This write-up explains the sources of algorithmic bias in healthcare and the pathways upon which our societal health deficiencies in the form of bias sip into the “innocent” algorithms.

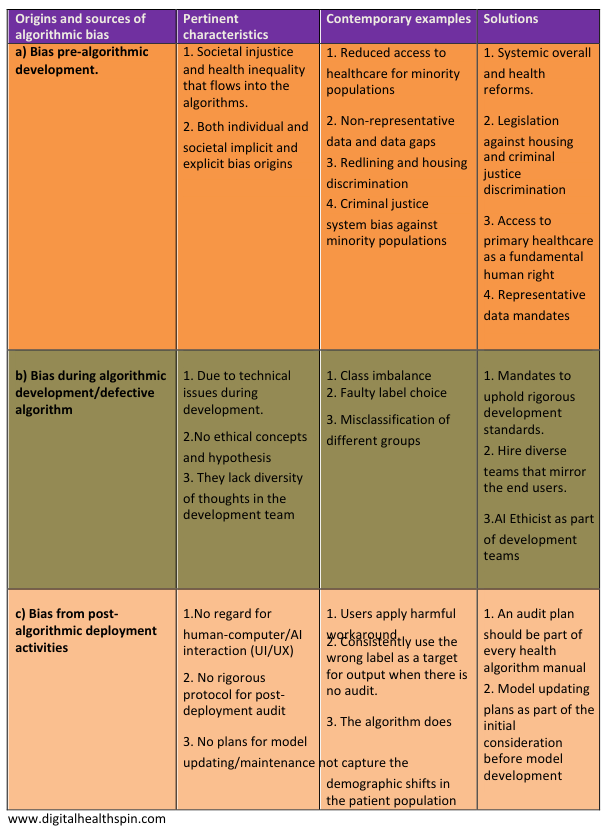

Healthcare algorithmic bias is ubiquitous in its development process, and can originate from: data sourcing before deployment, algorithmic development, implementation, and post-deployment activities.

Ahmed-digital-doc

Tweet

Uncovering the various forms of healthcare Algorithmic bias

1. Complex healthcare algorithmic bias

Data scientists create these complex algorithms with colossal training datasets, a.k.a big data.

The dataset and the process of achieving these “sci-fi” type output from these models have the characteristics of massive volume, high velocity, and the ability to cope with various data points all at once.

These characteristics distinguish big data applications from traditional statistical methods. They come in the form of conventional AI applications and deep learning models.

Examples are models to predict hospital readmissions, patients who would not attend clinical appointments, and algorithms to diagnose conditions like skin cancer from pictures.

2.Simple healthcare algorithmic bias

These are algorithms built with mostly traditional statistical methods without the scale of data used in big data applications. Instead, clinicians apply them as simple calculators in clinical decision-making.

Some are embedded directly in digital tools like electronic health records at the point of clinical decision-making.

For example, the algorithm for calculating kidney function, pre-operative cardiovascular assessment, and morbidity assessment for patients admitted to the emergency room.

However, complex healthcare algorithms are getting more attention due to the hype around their Alexa type® “intelligence.” Add on the jaw-dropping abilities of large language models(LLMs).

Nevertheless, complex algorithms are not as widely used in healthcare as simple ones because of many safety and regulatory concerns or lack thereof.

Breaking It Down: Simple vs. Complex Healthcare Algorithms Demystified

The fundamental difference is that we develop simple algorithmic calculators primarily from peer review processes. In contrast, complex algorithms’ outputs do not include much understanding of their inner workings (they lack explainability).

It’s important to mention that simple algorithms also have limitations and biases. However, they are more trusted by clinicians in their clinical decision-making because their output is explainable and understandable(no black box phenomenon)

Delving deeper into the sources of AI/ML algorithmic bias

Most of the origins of algorithmic bias usually predate the technical aspects of algorithmic development.

Even when an algorithm is perfect upon deployment, other sources of bias pre and post-deployment can still result in biased outcomes.

In essence, bias can originate from all the stages of algorithm development: data sourcing before deployment, algorithmic development and implementation, and post-deployment activities.

a. Pre-algorithm development bias

- Societal inequality and systemic racism

Racial health inequality, gender bias, and discrimination based on sexual orientation and age are all forms of societal bias that may end up in an algorithm. This issue may be subtle and complex to tease out just by looking at datasets.

For example, ethnic minorities in the US tend to have more severe depressive disorder than their white counterparts. However, they are less likely to be prescribed anti-depressants [3].

The reason for this is grounded in reduced access to healthcare by minority populations in the US. It takes a reasonable amount of health access to be prescribed anti-depressants by a doctor because they are prescription-only medications.

Therefore, any algorithm built on only this premise will erroneously produce output that seems to infer that minority patients have less severe depressive disorders due to less consumption of anti-depressants.

In terms of gender bias in society, health professionals are primarily biased in underestimating levels of pain in women compared to men.

Likewise, a pain algorithm will likely yield a false output regarding predicting pain levels with fatal consequences such as missing a heart attack in a female patient.[4]

- Non-representative data and data gaps

For a health algorithm to be broadly helpful and generalizable in a highly diverse population like the US, you have to train it with data that mirrors the diversity of the target population. Otherwise, it risks producing dangerous and biased output.

Although the advent of open databases and registries for algorithmic development is desirable, there is a sinister part to their existence.

First, most of the data in them do not represent target populations as they mostly mirror people of Caucasian ancestry who can afford health access.

For example, despite the potential benefits of genomic advancement in personalized care, most genomics databases contain primarily data from people of European ancestry [5].

Moreover, even closed systems like algorithms built from various health systems’ electronic health record systems (EHR) are also prone to multiple biases based on inaccuracies and low quality of data collection.

For example, it is a best practice requirement to capture a patient’s sexual orientation. However, our routine clinical practices largely ignore this.

It implies that algorithms built on EHR data will be biased against non-heterosexual patients[6].

Additionally, most of the data used to build clinical algorithms in the US are not geographically representative. Most data are from only three states: California, Massachusetts, and New York[7].

b. bias due to defective model during algorithm development

Although the technical details required to prevent bias during algorithm development are beyond this write-up’s scope, I will highlight the simplest form of technical bias here.

1.Class imbalance: One common cause of technical bias is a class imbalance that allows algorithms to replicate the patterns in the dominant category inherent in its training data.

For example, you can train an algorithm to predict pneumonia with X-ray data containing images of 80% healthy people and 20% pneumonia patients. The algorithm will be 80% likely to be right by predicting that every image is healthy.

Data scientists can overcome such imbalance problems with alternative evaluation metrics like the F score[8].

2.Label choice: Occurs when there is a mismatch between the ideal target for prediction and the actual proxy target the algorithm predicts.

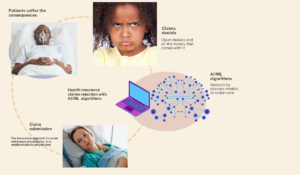

For example, Optum inadvertently created the famous biased algorithm to predict patients with high future healthcare needs. The aim was to set up social interventions that may reduce the future needs of the patients identified as high utilizers.

However, a proxy target used in the algorithm was healthcare cost, which was a label choice bias. As a result, the model produced biased discriminatory output against minority populations[9,10].

The reason was that healthcare cost as a proxy for future health needs means that many minority populations without health access are not captured in the data. As such, the algorithm erroneously considered them to have low future health needs.

3.Other issues that can result in bias during model development include systematically misclassifying different groups and not ensuring that algorithmic predictions are not statistically independent from protected groups, like ethnic minorities, based on gender or sexual orientation[11].

c. Bias from faulty post-algorithm deployment activities

Even after successfully deploying a perfect algorithm, biased outcomes from algorithms can still occur. Post-deployment bias results from not upholding or keeping up with best practice standards after model deployment.

1.Algorithm deployment without regard for the human-algorithm interaction

Generally speaking, the change process is not fun, and people in an organization will naturally resist any drastic changes to their workflow. This resistance to change is more evident in the healthcare sector as it lags behind contemporary technological adoption relative to other industries.

Implementing an algorithm in an organization without implementing adequate change management procedures will likely result in a biased algorithmic output.

This results from human interaction with the algorithm in resistance to the new status quo. It may result in the wrongful application of the model and jeopardize the indications for use.

Many individuals can also bypass steps or processes of adequately using the algorithms. These “workarounds,” already very common with EHR use, can result in faulty algorithmic output.[12].

2.Lack of a standard protocol for post-implementation algorithmic audit

Algorithms can change the dynamic of caring for patients after implementation. Likewise, using the wrong labels as proxies for target outcomes is easy. Unfortunately, these outcome deficiencies mostly become evident after the model is in full production mode.

It is vital to have a well-laid-out periodic assessment of the algorithm. The audit frequency should depend on the nature of the algorithm and how dangerous its prediction can be if it goes wrong.

3. Deficiency in the plan for algorithmic maintenance and model updating

Although having a well-laid-out protocol for algorithmic audits is a good start, it is equally important to implement all the corrective measures found during the audit phase.

It becomes futile when the audit phase identifies problems without resources or specific ways to resolve them.

Model updating ensures that the model continues to produce unbiased output even when there is a systemic change in the training dataset. For example, with the increase in senior citizens, the population’s age demographics may change from the predominantly younger individuals in the training data.

You must continuously update the model to produce optimal results due to the significant change in training data characteristics.

Other examples of changes that need model updating are changes in practice standards/clinical recommendations, changes in payer mix (private insurance vs. Government), changes in the number of uninsured, etc.

Digital health 360 degrees lens

Healthcare algorithms are here to stay. However, these healthcare models remain loosely regulated despite their potential for good and also for evil if not correctly implemented.

Algorithmic bias amplifies individual or societal bias and is the bane of our existence with a wrongfully executed model.

Bias can originate from any stage in the process of algorithm development. For example, it can arise from the pre-conception, model development, and post-implementation phases.

These models tend to automate existing health inequity and institutional racism. This is the most significant source of algorithmic bias and requires the highest heavy lifting.

We would need to overhaul our health system and prioritize other social determinants of health to prevent this scourge.

A good start is by increasing health access to all and working our way through improving other social determinants of health.

Data quality, gaps, and lack of adequate post-implementation audit are other equally important sources of health algorithm bias.

In fact, amongst all the various forms of healthcare AI bias, data-related types are the low-hanging fruits.

However, there are practical solutions to this problem if we have the will to apply them.

The technical optimization of the algorithm itself appears to be the least difficult source of bias to overcome.

Primarily because the algorithm is not to blame for algorithmic bias; instead, the humans behind the algorithm are the culprits.